Dependent Tests in Test Automation

Preface

It may come as a surprise, but dependent tests are a common problem in test automation. More precisely, what surprises me is how many people do not realize why dependent tests are a problem. Some are even proud of having them.

They are quite easy to create and not always easy to detect. In this article, I will show you how dependent tests can break your test automation and how to avoid them.

Before we start, I’d like to share one thought. I read it some time ago, I don’t remember where, but it stuck in my head. It was about the difference between a junior and a senior developer. Both can write shitty code; the difference is that a senior developer knows their code is shitty. The same applies to testware. You might already have dependent tests, but you should know that they are shitty.

What are dependent tests?

Usually, we think of dependent tests as tests that depend on each other and can only be executed in a certain order. But I suggest looking at it more broadly.

A dependent test is a test that is not self-contained. It relies on circumstances that are not under its control.

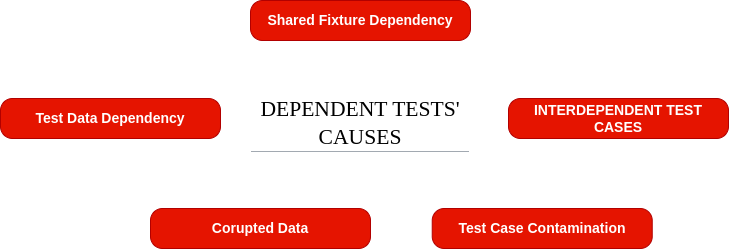

This definition is quite broad, so let’s break it down into more specific cases.

- Shared fixture dependency. Tests fail because the initial conditions or environment were corrupted by another test and not cleaned up properly. For example, your browser context is not isolated but reused by multiple test scenarios, and it still holds a cookie left over from a previous test.

- Interdependent test cases. This is probably the most common case. It occurs when a test case relies on state or data modified by another test case or script, which leads to unpredictable and unreliable results. The test cases depend on each other, that is, they can only be executed in a fixed sequence. For example, a previous test failed and left the system “dirty”, so all subsequent tests failed as well.

- Test case contamination. This is a special case of dependent test cases. Tests fail because you chose the wrong approach to assertions: you perform a sensitive compare where it is not required, so changes that have nothing to do with your test case affect the result. It often happens when you migrate manual test cases to automation without changing the approach. Manual test cases often repeat the same steps, and it is easy to carry those repeated checks over without noticing.

- Test data dependency. Multiple tests share the same test data, and changes made by one test affect the others. Data inconsistencies lead to test failures and make it difficult to isolate issues. For example, you use the same user for multiple tests, so actions performed in one test can affect all the others. It gets even worse when tests run in parallel.

- Corrupted data. Tests fail because the data has been corrupted or changed by another application or user. For example, you want to perform a simple check and don’t want to prepare data, since preparing it takes many times longer than the test itself. So you rely, fully or partially, on data that already exists in a particular environment. Then at some point a random employee or another application changes the properties of that data.

Some of these cases are more common than others, but all of them are bad. They make your test automation fragile and unreliable.

And in fact, there is a rule that can help you to avoid dependent tests.

A truly independent test can be executed in any order, individually or as part of a group, in any environment, and at any time, consistently producing the same result.
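To make the rule concrete, here is a minimal sketch in plain Python of two tests that break it. Everything in it is a hypothetical stand-in invented for illustration: the `FakeShop` class plays the role of the system under test, and the tests are written as plain functions rather than in any particular framework.

```python
class FakeShop:
    """Hypothetical in-memory stand-in for the system under test."""

    def __init__(self):
        self.carts = {}  # user name -> list of product names

    def add_to_cart(self, user, product):
        self.carts.setdefault(user, []).append(product)

    def cart(self, user):
        return list(self.carts.get(user, []))


# One shop instance and one user shared by every test: this is the problem.
shop = FakeShop()
SHARED_USER = "alice"


def check_add_product():
    shop.add_to_cart(SHARED_USER, "book")
    assert "book" in shop.cart(SHARED_USER)


def check_cart_is_empty_for_new_user():
    # Passes only if no earlier test has touched the shared user.
    assert shop.cart(SHARED_USER) == []


def run(test):
    """Run one test function and report whether it passed."""
    try:
        test()
        return True
    except AssertionError:
        return False


# Order 1: the "empty cart" test runs first, so both tests pass.
first_order = [run(check_cart_is_empty_for_new_user), run(check_add_product)]

# Order 2: same tests, same code, but the shared state is dirty by the second test.
shop = FakeShop()
second_order = [run(check_add_product), run(check_cart_is_empty_for_new_user)]

print(first_order)   # [True, True]
print(second_order)  # [True, False]
```

Nothing in the system under test changed between the two runs. Only the order did, and that alone was enough to turn a passing test into a failing one.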

Why are dependent tests so bad?

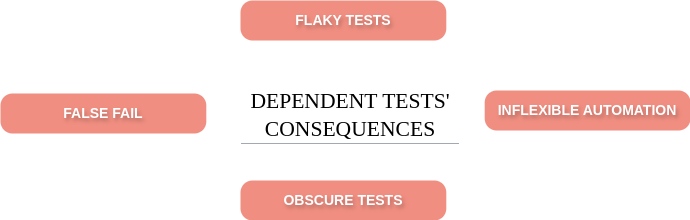

I agree, just saying that dependent tests are bad is not enough. Let’s see what consequences they can have.

- False failures. The tests fail not because of errors in the SUT, but because of errors in the test automation testware or environment issues. False failures are the most dangerous type of failures because they can lead to a loss of trust in the test automation. In addition, they can lead to a waste of time and resources spent on investigating the issue.

- Flaky tests. Flaky tests are tests that sometimes pass and sometimes fail without any changes in the SUT or testware. Flaky tests are a nightmare for test automation engineers because they are unpredictable.

- Inflexible automation. Dependent tests are hard to maintain and refactor. You cannot change one test without affecting others. You cannot run tests in parallel because they will interfere with each other. You cannot run single tests because they rely on the state of the system after the previous tests. You cannot run tests in any order because they depend on each other. You cannot run tests in any environment because they are dependent on the existing data.

- Obscure tests. Dependent tests are hard to understand. You need to know the state of the system after each test to understand the next one. You need to know the order of the tests to understand the results. It decreases the readability of the testware. It is like reading a book from the middle.

There are other consequences, but these are the most common ones. I guess even these are enough to convince you that dependent tests are bad.

How to avoid dependent tests?

I hope that question is already in your head.

Let’s recall the rule I mentioned earlier.

A truly independent test can be executed in any order, individually or as part of a group, in any environment, and at any time, consistently producing the same result.

What questions should you ask yourself to ensure that your tests are independent?

- Can I run this test in isolation (without any other tests)? If the answer is no, then your test is dependent.

- Can I run this test in any order? If the answer is no, then your test is dependent.

- Can I run this test in any environment? If the answer is no, then your test is dependent.

- Can I run this test at any time? If the answer is no, then your test is dependent. (I have to admit that this question is not always applicable, because some tests are time-dependent by nature.)
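The first two questions can be checked mechanically. The sketch below is one possible approach, not a feature of any specific framework: the `order_dependent` helper, the `reset` callback, and the sample tests are all hypothetical. It runs every test alone to get a baseline, then reruns the whole set in every possible order and reports the tests whose outcome differs from the baseline.

```python
from itertools import permutations


def passes(test):
    """Return True if the test function finishes without an AssertionError."""
    try:
        test()
        return True
    except AssertionError:
        return False


def order_dependent(tests, reset):
    """Return the names of tests whose result changes with execution order.

    `reset` is a hypothetical callback that restores a clean environment.
    """
    # Baseline: each test executed alone, on a clean environment.
    baseline = {}
    for test in tests:
        reset()
        baseline[test.__name__] = passes(test)

    suspects = set()
    # The number of orders grows factorially: fine for a handful of tests,
    # for larger suites a few random orders would have to do.
    for order in permutations(tests):
        reset()
        for test in order:
            if passes(test) != baseline[test.__name__]:
                suspects.add(test.__name__)
    return sorted(suspects)


# --- hypothetical sample tests sharing one user registry ---
users = []


def reset_users():
    users.clear()


def check_register_user():
    users.append("bob")
    assert "bob" in users


def check_login_existing_user():
    # Dependent: silently expects check_register_user to have run first.
    assert "bob" in users


def check_login_self_contained():
    # Independent: creates the data it needs.
    users.append("carol")
    assert "carol" in users


print(order_dependent(
    [check_register_user, check_login_existing_user, check_login_self_contained],
    reset_users,
))  # ['check_login_existing_user']
```

Note that a dependent test may well be green in the usual run order. What gives it away is that its result in isolation differs from its result inside the group.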

Let’s go back to the causes we mentioned earlier and see how to avoid each of them.

- Shared fixture dependency. Use the setup/teardown mechanism to ensure that the initial conditions are always the same. For e2e tests, use a new browser context for each test scenario.

  Playwright does this under the hood, so you don’t need to worry about it. I recommend Playwright for e2e tests, since it was built with isolation in mind.

- Interdependent test cases. Do not rely on the state the system was left in by previous tests. Use the setup/teardown mechanism to ensure that the initial conditions are always the same. This rule is easy to follow if you already follow the previous one.

  Again, if you are using Playwright, you can use custom fixtures to ensure that the initial conditions are always the same.

- Test data dependency. Use unique data for each test. Use libraries like Faker to generate random data. In most cases, sharing the same data between tests means that you rely on a particular order of tests.

  Of course, there might be cases where the business logic requires you to use the same data. But in practice, these cases are rare.

- Corrupted data. If possible, do not rely on existing data. And from practical experience, it’s almost always possible. Prepare the data you need for the test. It might take some time now, but it will save you a lot of time in the future.

  Playwright fixtures and project dependencies can help you prepare the data you need for testing.

- Test case contamination. Use the right approach to assertions. Do not use a sensitive compare where it is not required. If you are not sure whether you should validate something, ask yourself if it is related to your test case. When you check that “a user is able to add a product to the cart”, you don’t need to check that items are sorted by price. When you check that “a user is able to log in”, you don’t need to check all the elements on the page.

  If an assertion unrelated to the test case objective fails before the main assertion, you cannot say whether the main assertion holds, because the test has already failed. Of course, you can use soft assertions, but if you already use them, you probably know why you need them.
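Several of the recommendations above can be sketched together in a few lines of plain Python. This is an illustration under stated assumptions, not a reference implementation: `FakeShop` is a hypothetical in-memory stand-in for the system under test, and `fresh_shop_with_user` is a hypothetical helper that plays the role a fixture would play in Playwright or pytest. Unique names come from the standard `uuid` module here, where a real project might use Faker.

```python
import uuid
from contextlib import contextmanager


class FakeShop:
    """Hypothetical in-memory stand-in for the system under test."""

    def __init__(self):
        self.users = set()
        self.carts = {}

    def register(self, name):
        self.users.add(name)
        self.carts[name] = []

    def add_to_cart(self, user, product):
        self.carts[user].append(product)

    def cart_page(self, user):
        # A "page" carries more than any single test cares about.
        return {
            "items": list(self.carts[user]),
            "banner": "Summer sale!",  # unrelated content that changes often
            "user_count": len(self.users),
        }


@contextmanager
def fresh_shop_with_user():
    """Setup/teardown: a new shop and a unique user for each test."""
    shop = FakeShop()                      # setup: isolated environment
    user = f"user-{uuid.uuid4().hex[:8]}"  # unique test data, never shared
    shop.register(user)                    # the test prepares its own data
    try:
        yield shop, user
    finally:
        shop.carts.clear()                 # teardown runs even if the test fails
        shop.users.clear()


def check_user_can_add_product_to_cart():
    with fresh_shop_with_user() as (shop, user):
        shop.add_to_cart(user, "book")
        page = shop.cart_page(user)
        # Focused assertion: only what this test case is about.
        assert page["items"] == ["book"]
        # A sensitive compare of the whole page, for example
        #   assert page == {"items": ["book"], "banner": "Spring sale!", "user_count": 1}
        # would fail whenever the banner changes, although the cart works fine.


# The test can run any number of times, in any order, with the same result.
check_user_can_add_product_to_cart()
check_user_can_add_product_to_cart()
print("ok")
```

The design choice is that the test owns everything it touches. It creates its environment and its data, asserts only on its own objective, and leaves nothing behind for the next test to trip over.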

Conclusion

Dependent tests are a common problem in test automation. They make your test automation fragile and unreliable. They can lead to false failures, flaky tests, inflexible automation, and obscure tests.

To avoid dependent tests, you should follow the rule that a truly independent test can be executed in any order, individually or as part of a group, in any environment, and at any time, consistently producing the same result.

We all know that independent tests are hard to achieve, but they are worth the effort. Independent tests are easier to maintain, refactor, and understand. They might take longer to run, but they will save you a lot of time in the future. Besides, it is easier to speed up your tests than to troubleshoot dependent ones.

Trust me on this one. I’ve been there.

Thanks for reading!